AWS Lambda Runtime Execution Model: Understanding Task Scheduling and Event Loop Behavior

A detailed analysis of AWS Lambda's execution model, exploring the Runtime API, Node.js event loop integration, and why perceived 'silent crashes' are actually intended behavior in Lambda's optimized execution environment.

The recent viral discussion around AWS Lambda’s alleged “silent crash” behavior has sparked significant debate within the serverless community. The core assertion suggests that AWS Lambda’s Node.js runtime silently terminates execution during asynchronous operations, particularly when making outbound HTTPS requests from within VPC-configured functions. However, this characterization fundamentally misunderstands Lambda’s execution model and represents a conceptual gap between traditional server-based applications and serverless function lifecycle management.

This phenomenon isn’t a crash or runtime flaw but rather the intended behavior of Lambda’s optimized execution environment. Understanding this distinction requires examining Lambda’s Runtime API, the Node.js event loop integration, and the fundamental differences between persistent server processes and ephemeral function execution contexts.

Lambda’s Execution Architecture and Runtime API Foundation

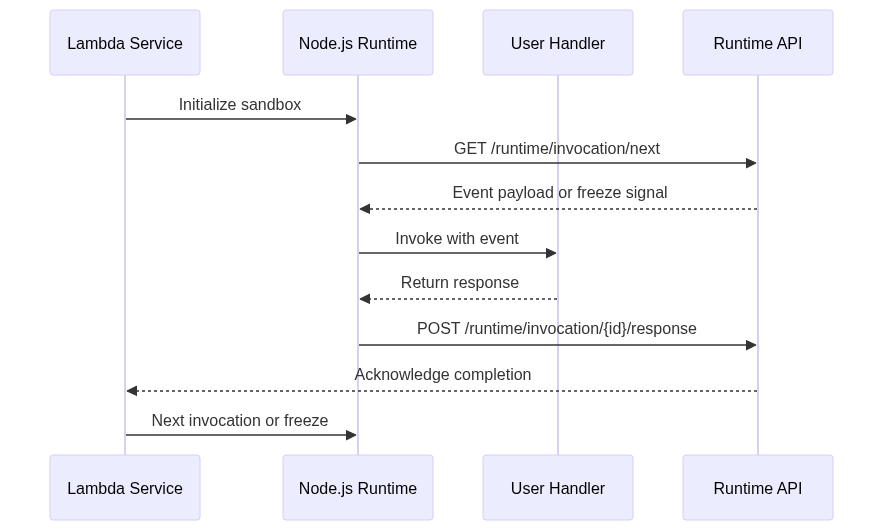

Lambda operates through a sophisticated Runtime API that manages function lifecycle through HTTP-based endpoints served at a link-local address. This API orchestrates the communication between Lambda’s managed infrastructure and user function code through two primary endpoints: /runtime/invocation/next and /runtime/invocation/{RequestId}/response.

The Runtime API functions as a state machine where functions continuously poll the /next endpoint for incoming requests. Upon receiving an invocation, the runtime processes the event through the user’s handler function, then submits the response via the /response endpoint before returning to await the next invocation. This cycle continues until Lambda decides to freeze or terminate the execution environment.

The critical distinction lies in Lambda’s approach to function completion. Once a handler returns its response, Lambda considers the invocation complete and immediately begins the process of either preparing for the next invocation or freezing the execution environment. Any asynchronous operations initiated but not awaited by the handler become orphaned tasks that may never execute to completion.

Node.js Runtime Implementation and Event Loop Integration

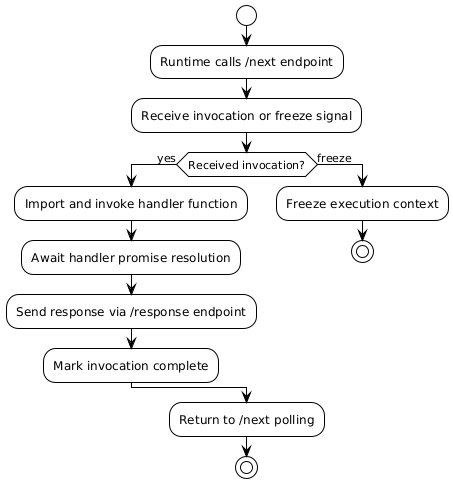

The Node.js runtime interface client serves as the bridge between Lambda’s Runtime API and user function code. This client implements a precise execution pattern that reveals why the perceived “silent crash” occurs:

The runtime implementation awaits the handler’s promise resolution, but once that promise resolves and returns a value, the runtime immediately submits the response and considers the invocation complete. This behavior aligns with Lambda’s design philosophy of rapid scaling and resource optimization.

When examining the problematic code pattern, the issue becomes apparent:

1

2

3

4

5

6

7

8

async sendTestEmail() {

this.eventEmitter.emit(events.USER_REGISTERED, {

name: 'Joe Bloggs',

email: 'email@example.com',

token: 'dummy-token-123',

});

return { message: 'Manual test triggered' };

}

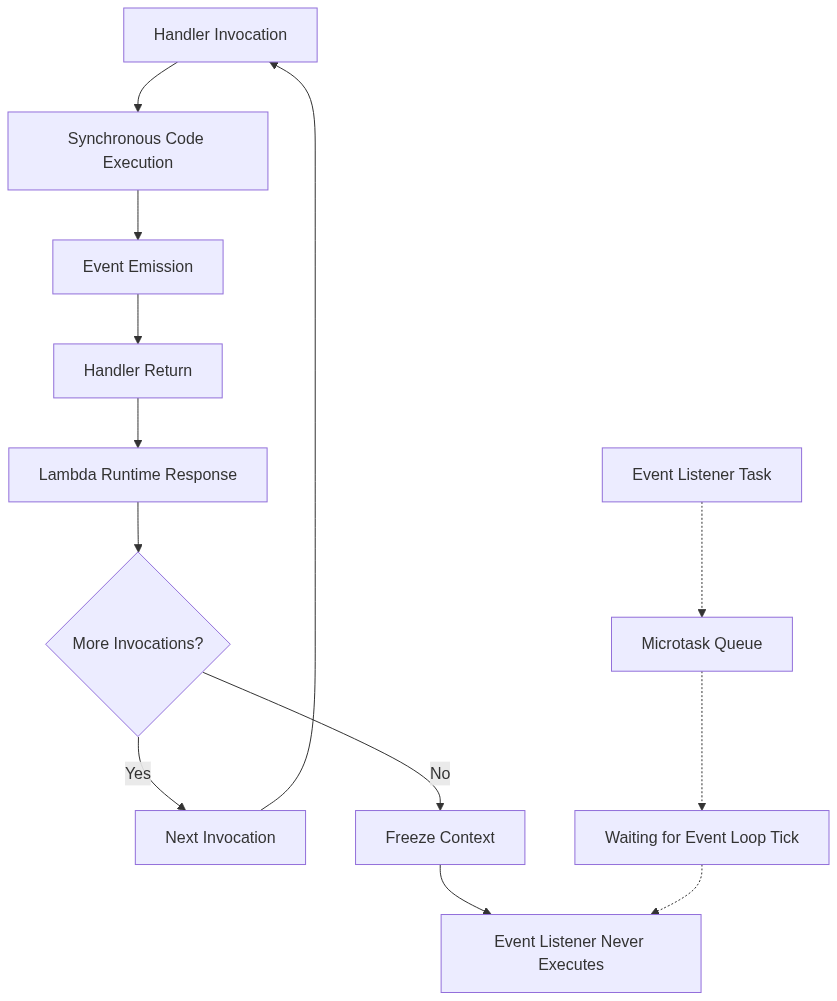

The event emission occurs synchronously, but the event listener that should process the USER_REGISTERED event operates asynchronously. Since the handler returns immediately after emitting the event, Lambda’s runtime considers the invocation complete and may freeze the execution context before the event listener has an opportunity to execute.

Event Loop Mechanics and Task Scheduling in Lambda Context

Node.js relies on an event loop that manages the execution of callbacks, promises, and other asynchronous operations through different phases. Under normal server conditions, the event loop continues running indefinitely, processing tasks from various queues as they become available. However, Lambda’s execution model introduces a controlled interruption to this process.

The event listener registration creates a microtask that gets queued for execution during the next event loop tick. However, since the handler returns synchronously after event emission, Lambda’s runtime submits the response and potentially freezes the context before the event loop can process the queued listener task.

This behavior isn’t limited to event emitters but affects any asynchronous operation not explicitly awaited by the handler function. The Lambda execution model prioritizes rapid response times and efficient resource utilization over accommodating fire-and-forget asynchronous patterns common in long-running server applications.

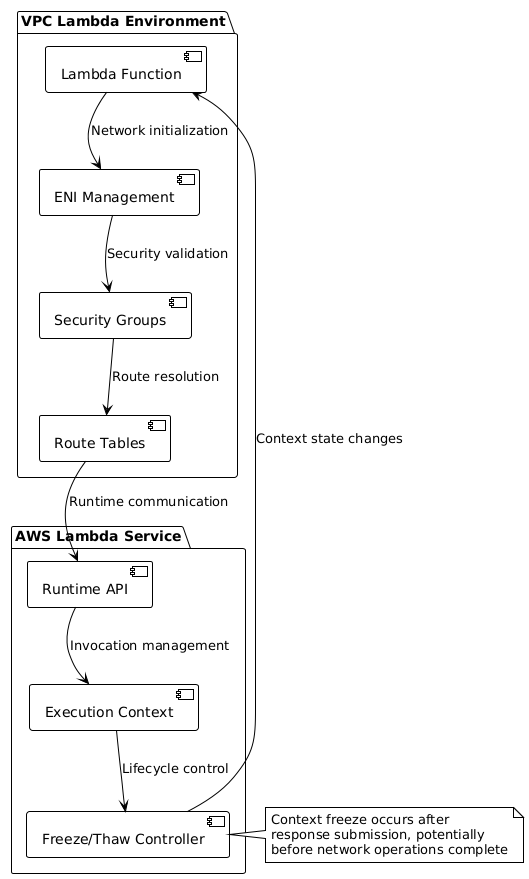

VPC Configuration Impact on Execution Timing

VPC-configured Lambda functions introduce additional complexity through network initialization overhead and connection management. When Lambda functions operate within a VPC, they must establish network interfaces and routing tables, which can extend the initialization time and affect the timing of subsequent operations.

The interaction between VPC networking and the event loop timing can create scenarios where asynchronous operations appear to fail inconsistently. Network latency and connection establishment times can influence whether queued tasks execute before the Lambda runtime freezes the context, leading to seemingly random success or failure patterns.

Correct Approaches for Asynchronous Processing in Lambda

Understanding Lambda’s execution model enables the implementation of appropriate patterns for asynchronous processing. Three primary approaches provide reliable solutions while working within Lambda’s architectural constraints.

To get into depth for the options check this link

Architectural Recommendations for Serverless Async Patterns

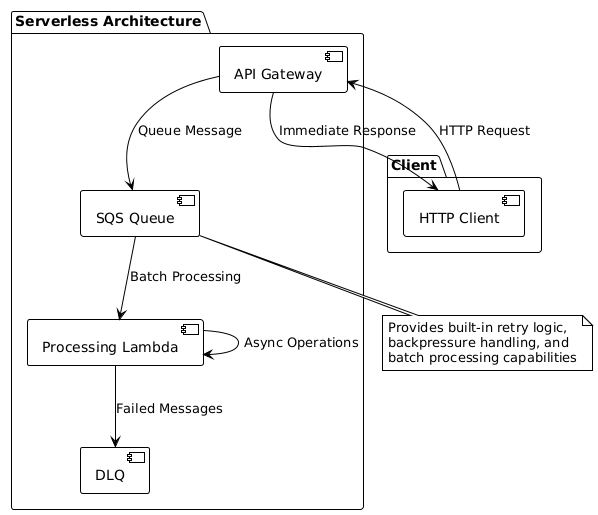

Rather than adapting traditional server patterns to Lambda’s execution model, the optimal approach involves redesigning application architecture to align with serverless principles. For the use case described in the original problem, direct API Gateway to SQS integration provides superior reliability and scalability characteristics.

This pattern separates the immediate HTTP response from the asynchronous processing workflow, allowing each component to operate within its optimal execution context. The HTTP endpoint returns immediately while SQS queues the processing task for dedicated Lambda functions optimized for batch processing, retry logic, and error handling.

Understanding Lambda’s Design Philosophy

The fundamental misunderstanding in characterizing Lambda’s behavior as a “silent crash” stems from expecting Lambda to function identically to traditional server environments. Lambda’s design explicitly optimizes for rapid scaling, efficient resource utilization, and predictable execution patterns rather than accommodating arbitrary async patterns.

This opinionated approach enables Lambda’s core value propositions: automatic scaling, minimal operational overhead, and cost-efficient execution. Attempting to force traditional server patterns onto Lambda’s execution model creates friction and unreliable behavior patterns that work against the platform’s architectural intent.

The execution model described represents Lambda’s strength rather than a limitation. By understanding and working within these constraints, developers can build more reliable, scalable, and cost-effective serverless applications that leverage Lambda’s true capabilities rather than fighting against its design principles.

The key insight is recognizing that serverless function execution differs fundamentally from persistent server processes. Functions should complete all necessary work before returning, use appropriate async patterns when extended processing is required, or delegate long-running operations to more suitable architectural components like SQS-triggered processors or Step Functions for orchestration.

This understanding transforms the perceived “silent crash” from a mysterious runtime bug into a predictable behavior that can be designed around effectively, resulting in more robust and maintainable serverless applications that align with AWS Lambda’s architectural philosophy and execution model.