Day 58: Advanced Load Balancing in Operating Systems

1. Introduction

Advanced load balancing is a crucial component in modern operating systems and distributed systems that ensures optimal resource utilization and system performance. This brief article explores sophisticated load balancing techniques and scheduling strategies. Load balancing is essential for systems that handle a large number of tasks or requests, such as web servers, cloud computing platforms, and distributed databases.

The primary goal of load balancing is to distribute workloads across multiple processing units or systems to achieve optimal resource utilization, minimize response time, and prevent any single resource from becoming a bottleneck. By implementing advanced load balancing techniques, operating systems can ensure that tasks are executed efficiently, even under heavy load conditions.

2. Load Balancing Fundamentals

Core concepts that form the foundation of advanced load balancing:

-

Load Metrics: These are quantifiable measurements used to determine system load. Includes CPU usage, memory utilization, I/O operations, network bandwidth, and process queue length. Each metric provides different insights into system performance. For example, high CPU usage might indicate that a server is under heavy computational load, while high memory utilization might suggest that the server is handling large datasets.

-

Resource Allocation: The process of assigning available system resources to tasks based on their requirements and priorities. This involves both static allocation at process creation and dynamic reallocation during execution. Effective resource allocation ensures that tasks receive the necessary resources to complete their work without causing resource contention.

-

Load Distribution: The mechanism of spreading workload across multiple processing units or systems to achieve optimal resource utilization and minimize response time. Load distribution can be achieved through various techniques, such as round-robin scheduling, least-connections, and weighted distribution.

Understanding these core concepts is essential for designing and implementing effective load balancing strategies. By monitoring load metrics and dynamically allocating resources, operating systems can ensure that tasks are executed efficiently and that system resources are utilized optimally.

3. Advanced Scheduling Strategies

3.1 Dynamic Priority Scheduling

Dynamic priority scheduling involves adjusting the priority of tasks based on their behavior and system conditions. This allows the scheduler to prioritize tasks that are more critical or have been waiting longer, ensuring that they are executed promptly.

Here’s an implementation of a dynamic priority scheduler:

#include <stdio.h>

#include <stdlib.h>

#include <pthread.h>

#include <unistd.h>

typedef struct {

int process_id;

int initial_priority;

int dynamic_priority;

int cpu_burst;

int waiting_time;

} Process;

typedef struct {

Process* processes;

int count;

pthread_mutex_t lock;

} ProcessQueue;

ProcessQueue* create_queue(int size) {

ProcessQueue* queue = malloc(sizeof(ProcessQueue));

queue->processes = malloc(sizeof(Process) * size);

queue->count = 0;

pthread_mutex_init(&queue->lock, NULL);

return queue;

}

void adjust_priority(Process* process) {

// Dynamic priority adjustment based on waiting time

if (process->waiting_time > 10) {

process->dynamic_priority =

process->initial_priority + (process->waiting_time / 10);

}

}

Process* select_next_process(ProcessQueue* queue) {

pthread_mutex_lock(&queue->lock);

Process* selected = NULL;

int highest_priority = -1;

for (int i = 0; i < queue->count; i++) {

adjust_priority(&queue->processes[i]);

if (queue->processes[i].dynamic_priority > highest_priority) {

highest_priority = queue->processes[i].dynamic_priority;

selected = &queue->processes[i];

}

}

pthread_mutex_unlock(&queue->lock);

return selected;

}

int main() {

ProcessQueue* queue = create_queue(10);

// Add sample processes

Process p1 = {1, 5, 5, 10, 0};

Process p2 = {2, 3, 3, 15, 5};

Process p3 = {3, 4, 4, 8, 12};

queue->processes[queue->count++] = p1;

queue->processes[queue->count++] = p2;

queue->processes[queue->count++] = p3;

// Simulate scheduling

for (int i = 0; i < 3; i++) {

Process* next = select_next_process(queue);

printf("Selected Process ID: %d, Priority: %d\n",

next->process_id, next->dynamic_priority);

}

free(queue->processes);

free(queue);

return 0;

}

In this example, the adjust_priority function adjusts the priority of a process based on its waiting time. The select_next_process function selects the process with the highest dynamic priority for execution. This ensures that processes that have been waiting longer are given higher priority, reducing their waiting time and improving overall system performance.

3.2 Load Balancing Algorithm Implementation

Load balancing algorithms are used to distribute tasks across multiple servers or processing units. One common approach is to assign tasks to the least loaded server, ensuring that no single server becomes a bottleneck.

Here’s an implementation of a load balancing algorithm:

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <pthread.h>

#define MAX_SERVERS 10

#define MAX_TASKS 100

typedef struct {

int server_id;

double current_load;

int capacity;

pthread_mutex_t lock;

} Server;

typedef struct {

int task_id;

int resource_requirement;

} Task;

typedef struct {

Server servers[MAX_SERVERS];

int server_count;

pthread_mutex_t global_lock;

} LoadBalancer;

LoadBalancer* initialize_load_balancer(int server_count) {

LoadBalancer* lb = malloc(sizeof(LoadBalancer));

lb->server_count = server_count;

pthread_mutex_init(&lb->global_lock, NULL);

for (int i = 0; i < server_count; i++) {

lb->servers[i].server_id = i;

lb->servers[i].current_load = 0.0;

lb->servers[i].capacity = 100;

pthread_mutex_init(&lb->servers[i].lock, NULL);

}

return lb;

}

int least_loaded_server(LoadBalancer* lb) {

double min_load = 1.0;

int selected_server = -1;

pthread_mutex_lock(&lb->global_lock);

for (int i = 0; i < lb->server_count; i++) {

double load_ratio = lb->servers[i].current_load /

lb->servers[i].capacity;

if (load_ratio < min_load) {

min_load = load_ratio;

selected_server = i;

}

}

pthread_mutex_unlock(&lb->global_lock);

return selected_server;

}

void assign_task(LoadBalancer* lb, Task* task) {

int server_id = least_loaded_server(lb);

if (server_id >= 0) {

Server* server = &lb->servers[server_id];

pthread_mutex_lock(&server->lock);

server->current_load += task->resource_requirement;

printf("Task %d assigned to Server %d (Load: %.2f)\n",

task->task_id, server_id, server->current_load);

pthread_mutex_unlock(&server->lock);

}

}

int main() {

LoadBalancer* lb = initialize_load_balancer(3);

// Simulate task assignment

Task tasks[5] = {

{1, 20}, {2, 30}, {3, 15}, {4, 25}, {5, 10}

};

for (int i = 0; i < 5; i++) {

assign_task(lb, &tasks[i]);

}

// Cleanup

for (int i = 0; i < lb->server_count; i++) {

pthread_mutex_destroy(&lb->servers[i].lock);

}

pthread_mutex_destroy(&lb->global_lock);

free(lb);

return 0;

}

In this example, the least_loaded_server function selects the server with the lowest load ratio, and the assign_task function assigns the task to that server. This ensures that tasks are distributed evenly across servers, preventing any single server from becoming overloaded.

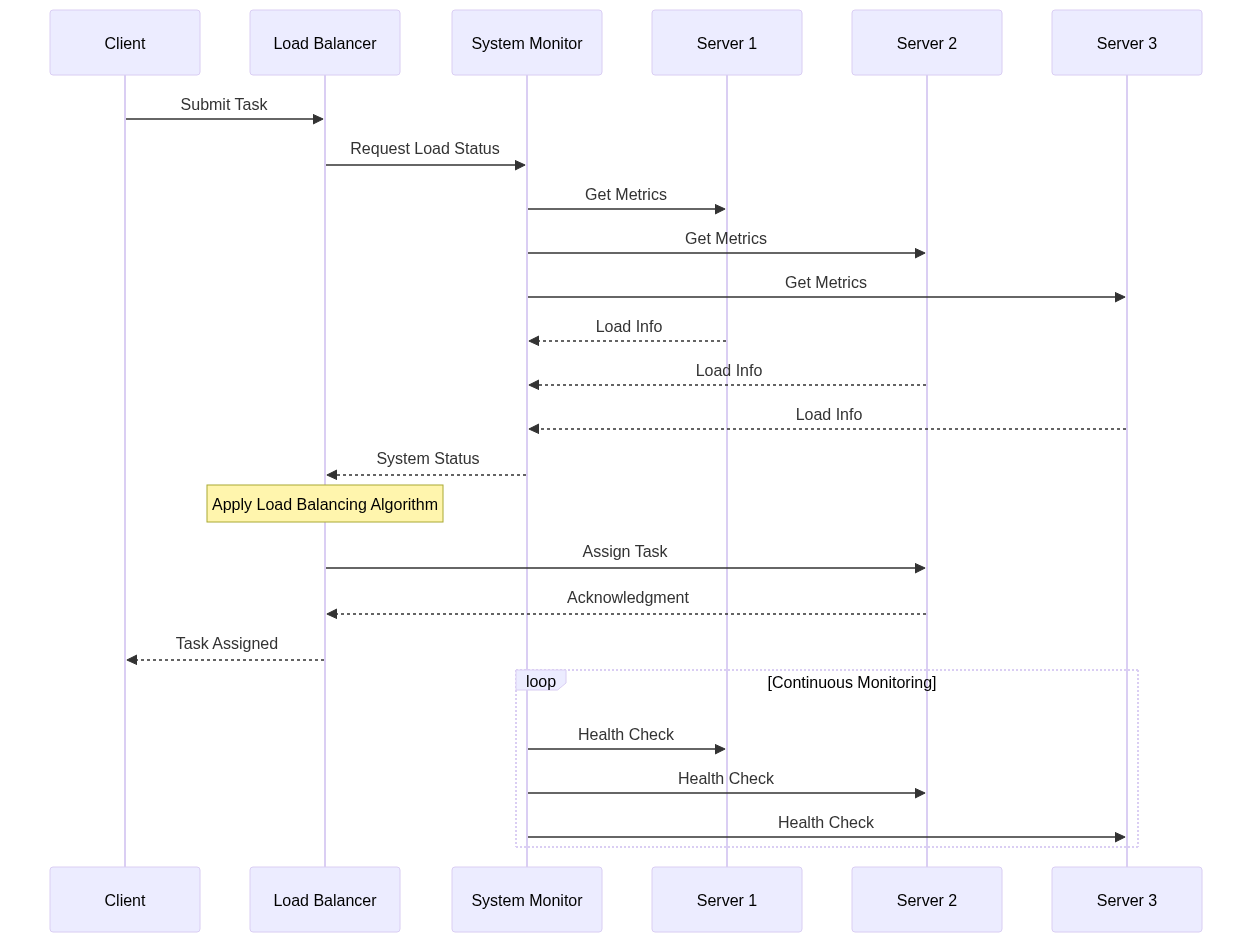

4. System Architecture

The system architecture for load balancing typically includes several components, such as a load balancer, system monitor, and multiple servers. These components work together to distribute tasks and monitor system performance.

In this architecture, the load balancer coordinates with the system monitor to gather load information from the servers. Based on this information, the load balancer assigns tasks to the least loaded server. The system monitor continuously checks the health of the servers, ensuring that the load balancer has up-to-date information for making decisions.

5. Performance Optimization

Key considerations for optimizing load balancer performance:

-

Response Time: The time taken to process and assign incoming tasks to appropriate servers. This includes the overhead of load calculation and decision-making algorithms. Reducing response time is crucial for ensuring that tasks are executed promptly and that the system remains responsive under heavy load.

-

Resource Utilization: The efficiency of resource usage across all servers. This involves maintaining balanced load distribution while considering server capabilities and current workload. Effective resource utilization ensures that system resources are used optimally, preventing resource contention and improving overall system performance.

-

Scalability: The system’s ability to handle increasing workload by adding more resources or servers dynamically without degrading performance. Scalability is essential for systems that need to handle growing workloads, such as web servers and cloud computing platforms.

By optimizing these factors, load balancers can ensure that tasks are executed efficiently and that system resources are utilized optimally, even under heavy load conditions.

6. Monitoring and Metrics

Essential metrics for load balancer monitoring:

-

Server Health: Continuous monitoring of server status including CPU usage, memory utilization, network latency, and error rates to make informed load balancing decisions. Monitoring server health helps identify potential issues before they impact system performance.

-

Queue Length: The number of pending tasks waiting to be assigned to servers. This helps in identifying bottlenecks and adjusting load balancing strategies. A high queue length might indicate that the system is under heavy load and that additional resources are needed.

-

Distribution Pattern: Analysis of how tasks are distributed across servers over time, helping identify potential improvements in the load balancing algorithm. Understanding the distribution pattern helps ensure that tasks are distributed evenly across servers, preventing any single server from becoming a bottleneck.

These metrics are crucial for maintaining the performance and reliability of a load-balanced system. By continuously monitoring these metrics, system administrators can make informed decisions about load balancing strategies and resource allocation.

7. Conclusion

Advanced load balancing is a complex but crucial aspect of modern computing systems. Understanding and implementing sophisticated scheduling strategies and load balancing algorithms is essential for building high-performance, scalable systems. By distributing tasks evenly across servers and continuously monitoring system performance, load balancers can ensure that tasks are executed efficiently and that system resources are utilized optimally.