Day 69: Advanced Process Scheduling: Techniques for Real-Time and Priority-Based Task Management

Table of Contents

- Introduction

- Scheduler Architecture

- Priority Management

- Task Queue

- Real-time Scheduling

- Implementation

- Load Balancing

- Performance Optimization

- Monitoring and Analysis

- Conclusion

1. Introduction

Advanced process scheduling, particularly real-time scheduling, is crucial for modern operating systems. This article explores sophisticated implementations of process scheduling mechanisms, focusing on real-time constraints, priority management, and efficient task queuing. Real-time scheduling ensures that tasks with strict deadlines are executed promptly, making it essential for time-sensitive applications like robotics, automotive systems, and multimedia processing.

2. Scheduler Architecture

The scheduler architecture is built around priority-based queuing, real-time constraints, and load balancing. Priority-based queuing ensures that high-priority tasks are executed first, while real-time constraints guarantee that tasks meet their deadlines. Load balancing distributes tasks across CPUs to optimize performance and resource utilization. The system supports both real-time and normal tasks, with mechanisms for preemption and cache affinity.

Core components include:

- Priority-based Queuing: Sophisticated queue management for different priority levels. The implementation supports both real-time and normal task scheduling with proper preemption.

- Real-time Constraints: Mechanisms for handling deadline-based scheduling and guaranteed execution times. The system implements earliest deadline first (EDF) and rate monotonic scheduling (RMS).

- Load Balancing: Advanced load distribution across multiple CPUs with consideration for cache affinity and power efficiency.

#include <linux/module.h>

#include <linux/kernel.h>

#include <linux/sched.h>

#include <linux/sched/rt.h>

#include <linux/cpumask.h>

#include <linux/slab.h>

#include <linux/rbtree.h>

#include <linux/list.h>

#define MAX_PRIO_LEVELS 140

#define RT_PRIO_BASE 100

#define DEFAULT_TIMESLICE (100 * HZ / 1000) // 100ms

struct rt_task_data {

struct task_struct *task;

unsigned long deadline;

unsigned long period;

unsigned long execution_time;

int priority;

struct rb_node node;

struct list_head list;

};

struct rt_rq {

struct rb_root_cached tasks_timeline;

struct list_head *priority_queues;

unsigned int nr_running;

raw_spinlock_t lock;

struct cpumask cpus_allowed;

unsigned long total_runtime;

};

struct scheduler_stats {

atomic64_t schedule_count;

atomic64_t preemptions;

atomic64_t migrations;

struct {

atomic_t count;

atomic64_t total_runtime;

} priority_levels[MAX_PRIO_LEVELS];

};

static struct rt_rq *rt_rq_array;

static struct scheduler_stats stats;

static int init_rt_scheduler(void)

{

int cpu;

rt_rq_array = kmalloc_array(num_possible_cpus(),

sizeof(struct rt_rq),

GFP_KERNEL);

if (!rt_rq_array)

return -ENOMEM;

for_each_possible_cpu(cpu) {

struct rt_rq *rt_rq = &rt_rq_array[cpu];

int i;

rt_rq->tasks_timeline = RB_ROOT_CACHED;

rt_rq->priority_queues = kmalloc_array(MAX_PRIO_LEVELS,

sizeof(struct list_head),

GFP_KERNEL);

if (!rt_rq->priority_queues)

goto cleanup;

for (i = 0; i < MAX_PRIO_LEVELS; i++)

INIT_LIST_HEAD(&rt_rq->priority_queues[i]);

raw_spin_lock_init(&rt_rq->lock);

rt_rq->nr_running = 0;

cpumask_clear(&rt_rq->cpus_allowed);

rt_rq->total_runtime = 0;

}

return 0;

cleanup:

while (--cpu >= 0)

kfree(rt_rq_array[cpu].priority_queues);

kfree(rt_rq_array);

return -ENOMEM;

}

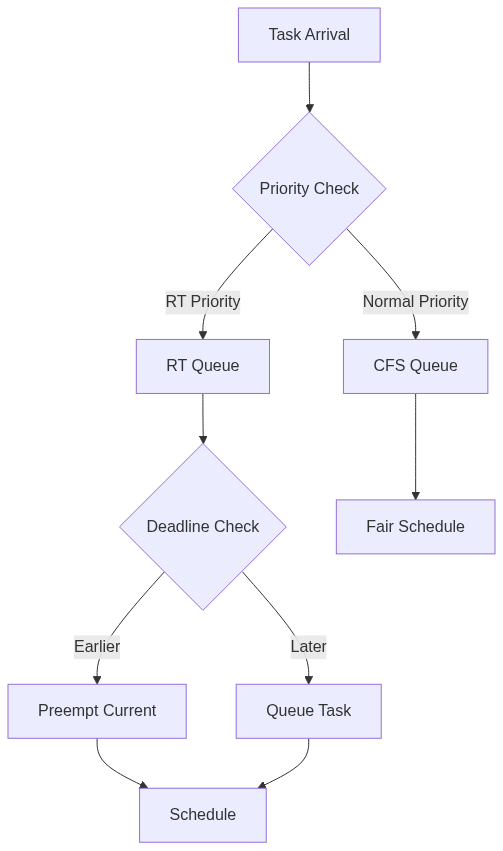

Flow of Scheduling

3. Priority Management

Priority management is critical for ensuring that high-priority tasks are executed before lower-priority ones. The scheduler uses a multi-level priority queue, where tasks are grouped by priority levels. Real-time tasks are assigned higher priorities, ensuring they are scheduled before normal tasks. The system also supports dynamic priority adjustment, allowing priorities to be updated based on task behavior or system load.

4. Task Queue

The task queue implementation uses red-black trees and linked lists for efficient task management. Red-black trees are used to manage tasks based on their deadlines, while linked lists handle tasks within the same priority level. This combination ensures fast insertion, deletion, and retrieval of tasks, even under high load. The system also supports preemption, allowing high-priority tasks to interrupt lower-priority ones.

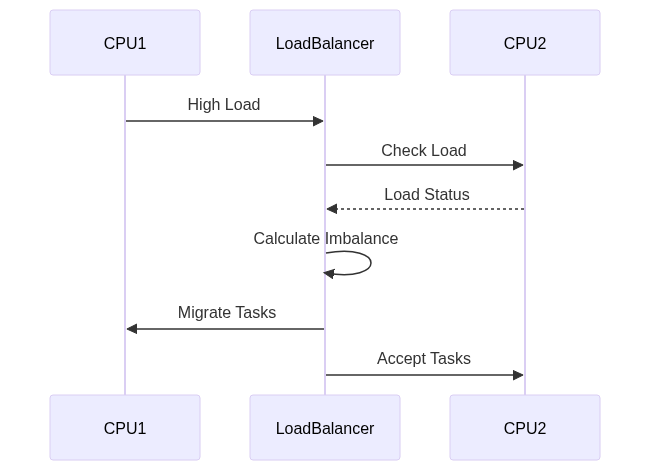

CPU Load Distribution

5. Real-time Scheduling

Real-time scheduling ensures that tasks meet their deadlines. The scheduler implements Earliest Deadline First (EDF) and Rate Monotonic Scheduling (RMS) algorithms. EDF schedules tasks with the earliest deadlines first, while RMS assigns priorities based on task periods. These algorithms are combined with preemption and context switching to ensure timely execution of real-time tasks.

static struct rt_task_data *create_rt_task(struct task_struct *task,

unsigned long deadline,

unsigned long period)

{

struct rt_task_data *rt_task;

rt_task = kmalloc(sizeof(*rt_task), GFP_KERNEL);

if (!rt_task)

return NULL;

rt_task->task = task;

rt_task->deadline = deadline;

rt_task->period = period;

rt_task->execution_time = 0;

rt_task->priority = task->prio;

RB_CLEAR_NODE(&rt_task->node);

INIT_LIST_HEAD(&rt_task->list);

return rt_task;

}

static void insert_rt_task(struct rt_rq *rt_rq,

struct rt_task_data *rt_task)

{

struct rb_node **link = &rt_rq->tasks_timeline.rb_root.rb_node;

struct rb_node *parent = NULL;

struct rt_task_data *entry;

unsigned long flags;

raw_spin_lock_irqsave(&rt_rq->lock, flags);

while (*link) {

parent = *link;

entry = rb_entry(parent, struct rt_task_data, node);

if (rt_task->deadline < entry->deadline)

link = &parent->rb_left;

else

link = &parent->rb_right;

}

rb_link_node(&rt_task->node, parent, link);

rb_insert_color(&rt_task->node, &rt_rq->tasks_timeline.rb_root);

list_add(&rt_task->list, &rt_rq->priority_queues[rt_task->priority]);

rt_rq->nr_running++;

raw_spin_unlock_irqrestore(&rt_rq->lock, flags);

}

6. Implementation

The implementation begins with defining the rt_task_data structure, which represents a real-time task. This structure includes the task’s deadline, period, execution time, and priority. The rt_rq structure manages the run queue for real-time tasks, using red-black trees and priority queues. The init_rt_scheduler function initializes the scheduler, allocating memory for run queues and setting up data structures.

#include <linux/module.h>

#include <linux/kernel.h>

#include <linux/sched.h>

#include <linux/sched/rt.h>

#include <linux/cpumask.h>

#include <linux/slab.h>

#include <linux/rbtree.h>

#include <linux/list.h>

#define MAX_PRIO_LEVELS 140

#define RT_PRIO_BASE 100

#define DEFAULT_TIMESLICE (100 * HZ / 1000) // 100ms

struct rt_task_data {

struct task_struct *task;

unsigned long deadline;

unsigned long period;

unsigned long execution_time;

int priority;

struct rb_node node;

struct list_head list;

};

struct rt_rq {

struct rb_root_cached tasks_timeline;

struct list_head *priority_queues;

unsigned int nr_running;

raw_spinlock_t lock;

struct cpumask cpus_allowed;

unsigned long total_runtime;

};

struct scheduler_stats {

atomic64_t schedule_count;

atomic64_t preemptions;

atomic64_t migrations;

struct {

atomic_t count;

atomic64_t total_runtime;

} priority_levels[MAX_PRIO_LEVELS];

};

static struct rt_rq *rt_rq_array;

static struct scheduler_stats stats;

static int init_rt_scheduler(void)

{

int cpu;

rt_rq_array = kmalloc_array(num_possible_cpus(),

sizeof(struct rt_rq),

GFP_KERNEL);

if (!rt_rq_array)

return -ENOMEM;

for_each_possible_cpu(cpu) {

struct rt_rq *rt_rq = &rt_rq_array[cpu];

int i;

rt_rq->tasks_timeline = RB_ROOT_CACHED;

rt_rq->priority_queues = kmalloc_array(MAX_PRIO_LEVELS,

sizeof(struct list_head),

GFP_KERNEL);

if (!rt_rq->priority_queues)

goto cleanup;

for (i = 0; i < MAX_PRIO_LEVELS; i++)

INIT_LIST_HEAD(&rt_rq->priority_queues[i]);

raw_spin_lock_init(&rt_rq->lock);

rt_rq->nr_running = 0;

cpumask_clear(&rt_rq->cpus_allowed);

rt_rq->total_runtime = 0;

}

return 0;

cleanup:

while (--cpu >= 0)

kfree(rt_rq_array[cpu].priority_queues);

kfree(rt_rq_array);

return -ENOMEM;

}

7. Load Balancing

Load balancing ensures that tasks are evenly distributed across CPUs. The scheduler uses a work-stealing algorithm, where idle CPUs steal tasks from busy ones. The load_balance_rt function migrates tasks between CPUs based on load and priority. This approach minimizes CPU idle time and ensures efficient resource utilization.

struct load_balance_info {

int src_cpu;

int dst_cpu;

unsigned long imbalance;

struct cpumask *cpus;

};

static int load_balance_rt(struct rt_rq *src_rq,

struct rt_rq *dst_rq,

struct load_balance_info *info)

{

struct rt_task_data *rt_task, *tmp;

unsigned long flags;

int migrated = 0;

if (!spin_trylock_irqsave(&src_rq->lock, flags))

return 0;

list_for_each_entry_safe(rt_task, tmp,

&src_rq->priority_queues[rt_task->priority],

list) {

if (migrated >= info->imbalance)

break;

if (can_migrate_task(rt_task->task, info->dst_cpu)) {

deactivate_task(src_rq, rt_task);

set_task_cpu(rt_task->task, info->dst_cpu);

activate_task(dst_rq, rt_task);

migrated++;

}

}

spin_unlock_irqrestore(&src_rq->lock, flags);

return migrated;

}

8. Performance Optimization

Performance optimization focuses on minimizing context switching and cache misses. The scheduler uses cache affinity to keep tasks on the same CPU, reducing cache reload overhead. It also implements batch scheduling, where multiple tasks are scheduled in a single context switch. The update_curr_rt function tracks task execution time, ensuring accurate scheduling decisions.

static void update_curr_rt(struct rt_rq *rt_rq)

{

struct task_struct *curr = current;

struct rt_task_data *rt_task;

u64 delta_exec;

unsigned long flags;

if (!curr || !rt_task_running(curr))

return;

delta_exec = rq_clock_task(rq_of(curr)) - curr->se.exec_start;

raw_spin_lock_irqsave(&rt_rq->lock, flags);

rt_task = curr->rt_task_data;

if (rt_task) {

rt_task->execution_time += delta_exec;

rt_rq->total_runtime += delta_exec;

}

raw_spin_unlock_irqrestore(&rt_rq->lock, flags);

}

9. Monitoring and Analysis

The scheduler includes a monitoring system to track performance metrics like deadline misses, preemptions, and migrations. The rt_metrics structure stores these metrics, which are updated during task execution. This data is used to analyze scheduler performance and identify bottlenecks.

struct rt_metrics {

atomic64_t deadline_misses;

atomic64_t preemptions;

atomic64_t migrations;

struct {

atomic_t running;

atomic64_t total_runtime;

atomic_t deadline_misses;

} priority_stats[MAX_PRIO_LEVELS];

};

static struct rt_metrics metrics;

static void update_rt_metrics(struct rt_task_data *rt_task,

unsigned long runtime)

{

if (runtime > rt_task->deadline)

atomic64_inc(&metrics.deadline_misses);

atomic64_add(runtime,

&metrics.priority_stats[rt_task->priority].total_runtime);

}

10. Conclusion

Advanced process scheduling requires careful consideration of real-time constraints, priority management, and load balancing. The provided implementation demonstrates practical approaches to building a robust scheduling system suitable for modern operating systems.