Understanding Nginx Worker Architecture

Table of Contents

- Introduction

- Core Architecture Overview

- Process Management and Worker Lifecycle

- Memory Architecture and Resource Management

- Connection Handling and Event Processing

- Performance Optimization and Scaling

- Practical Implementation

- Conclusion

- References and Further Reading

Introduction

NGINX has revolutionized web server architecture since its inception in 2004. At its core, NGINX’s success lies in its innovative approach to handling concurrent connections through an event-driven, asynchronous architecture. This deep dive explores the intricate details of NGINX’s worker architecture, focusing on how it achieves remarkable performance and scalability.

Core Architecture Overview

The foundation of NGINX’s architecture revolves around a master-worker model. Unlike traditional web servers that create new processes or threads for each connection, NGINX employs a more sophisticated approach. The master process, which runs with root privileges, serves as the orchestrator of the entire system. It’s responsible for critical tasks such as reading and validating configuration, managing worker processes, and handling signals.

What makes NGINX particularly interesting is its worker process design. When NGINX starts, the master process creates worker processes based on the configuration or automatically detects the optimal number based on available CPU cores. These worker processes run with lower privileges, typically under a dedicated user account, enhancing security through privilege separation.

Process Management and Worker Lifecycle

The relationship between master and worker processes is fascinating. When NGINX initializes, the master process reads the configuration and creates worker processes through a fork() system call. This fork operation creates exact copies of the master process, but these copies (workers) immediately begin their specialized roles.

Worker processes go through distinct lifecycle phases:

- Initialization: Workers set up their event processing mechanisms and prepare resource pools.

- Active Processing: Managing connections and handling requests.

- Graceful Shutdown: Completing existing requests before termination.

The beauty of this design lies in its simplicity and effectiveness. Each worker operates independently, maintaining its own connection pool and event loop. This independence eliminates the need for complex inter-process communication and reduces contention for shared resources.

Memory Architecture and Resource Management

NGINX’s memory architecture is designed for efficiency and predictability. Instead of allocating memory for each connection, NGINX uses a pooled memory approach. Memory pools are pre-allocated chunks of memory that workers use for various operations. This approach significantly reduces memory fragmentation and improves performance by minimizing system calls for memory allocation.

The memory management system is closely tied to the operating system’s virtual memory subsystem. NGINX takes advantage of modern operating system features like memory mapping and shared memory segments. When a worker process needs to access static files, it uses memory mapping to efficiently serve content without excessive copying between kernel and user space.

Connection Handling and Event Processing

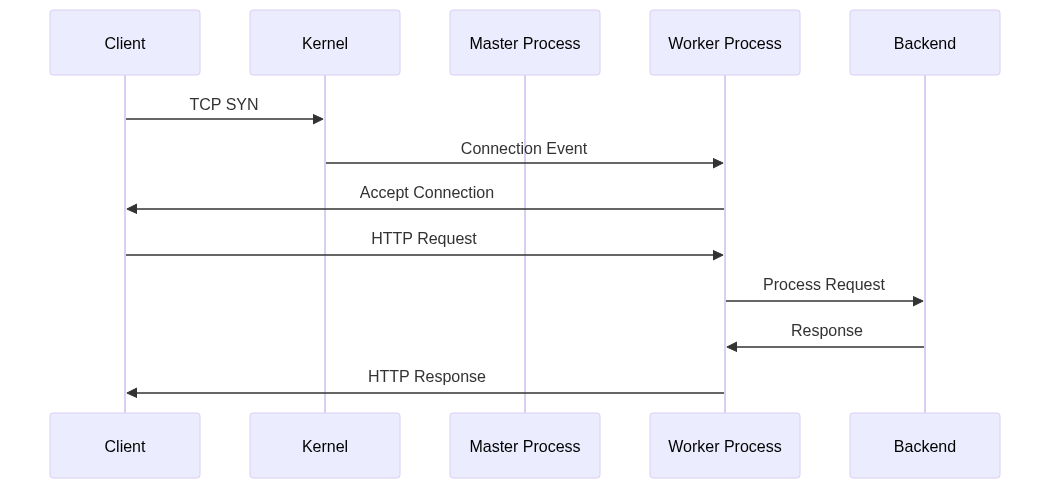

Perhaps the most crucial aspect of NGINX’s architecture is its connection handling mechanism. When a client initiates a connection, the operating system’s network stack handles the initial TCP handshake. The connection then moves through several stages:

- The SYN queue holds incomplete connections (during the TCP handshake).

- The accept queue maintains fully established connections waiting to be processed by NGINX.

Worker processes use advanced event notification mechanisms like epoll (Linux), kqueue (BSD), or similar system-specific implementations to efficiently monitor these connections.

What makes this system particularly efficient is its event-driven nature. Instead of dedicating a thread or process to each connection, workers use non-blocking I/O operations and event notifications to handle thousands of connections simultaneously. This approach minimizes context switching and memory overhead, allowing NGINX to maintain high performance even under heavy load.

Performance Optimization and Scaling

NGINX’s worker architecture naturally lends itself to excellent performance optimization. The system takes advantage of modern CPU architectures through worker process CPU affinity. By binding each worker to a specific CPU core, NGINX minimizes context switching and improves CPU cache utilization.

The scaling model is equally elegant. Horizontal scaling is achieved by adjusting the number of worker processes, while vertical scaling involves optimizing resource allocation and connection limits per worker. This flexibility allows NGINX to efficiently utilize available system resources, whether on a small VPS or a large multi-core server.

Connection Processing Pipeline

The way NGINX processes connections deserves special attention. When a connection is established, it goes through a sophisticated pipeline that demonstrates the elegance of NGINX’s architecture. The worker process that accepts the connection becomes solely responsible for its entire lifecycle. This single-responsibility principle ensures efficient resource utilization and prevents contention between workers.

Inside each worker, connections flow through several processing stages:

- Transport Layer: Raw TCP stream processing.

- SSL/TLS Layer: Encryption and decryption without blocking other connections.

- HTTP Layer: Incremental request parsing and response generation.

This streaming approach significantly reduces latency and memory usage, especially for large requests or responses.

Event Loop Architecture

At the heart of each worker process lies an event loop, which represents one of NGINX’s most sophisticated architectural elements. Unlike traditional thread-per-request servers, NGINX’s event loop allows each worker to handle thousands of connections simultaneously without the overhead of thread context switching.

The event loop operates on a simple yet powerful principle: instead of blocking on I/O operations, workers register interest in specific events (like data becoming available to read or a socket becoming writable) and then process these events as they occur.

Memory Management and Resource Optimization

NGINX’s memory management strategy goes beyond simple pooling. The system employs a hierarchical approach to memory allocation, with different types of allocators optimized for different use cases. Short-lived allocations use fast pool allocators, while longer-lived structures might use more traditional malloc-based allocation.

The system also implements sophisticated buffer management. Instead of allocating fixed-size buffers for each connection, NGINX uses a dynamic buffer chain approach. This allows efficient handling of both small and large requests without wasting memory.

State Management and Shared Resources

One of the most intricate aspects of NGINX’s architecture is how it manages state across worker processes. While workers operate independently, they sometimes need to share information. This is handled through shared memory segments, which are carefully designed to minimize contention and ensure consistency.

Error Handling and Resilience

NGINX’s error handling architecture is built around the principle of graceful degradation. When a worker process encounters an error, it’s designed to impact only the connections that worker is handling. The master process monitors worker health and can restart failed workers without disrupting other connections.

Conclusion

NGINX’s worker architecture represents a masterful balance of performance, scalability, and reliability. By deeply understanding operating system primitives and carefully designing around them, NGINX achieves remarkable efficiency without sacrificing stability or security. The event-driven, multi-process architecture provides a robust foundation for handling modern web traffic patterns.

The success of this architecture is evident in NGINX’s widespread adoption, from small personal websites to some of the world’s largest web platforms. Understanding these architectural principles is crucial for anyone working with web infrastructure, as they represent fundamental patterns in high-performance server design.

References and Further Reading

- “NGINX Architecture Guide” - Official Documentation

- “Systems Performance: Enterprise and the Cloud” - Brendan Gregg